Will AI fall short of hyped expectations just like predictive analytics did?

Similarities and differences between two of the most hyped tech advances of recent decades

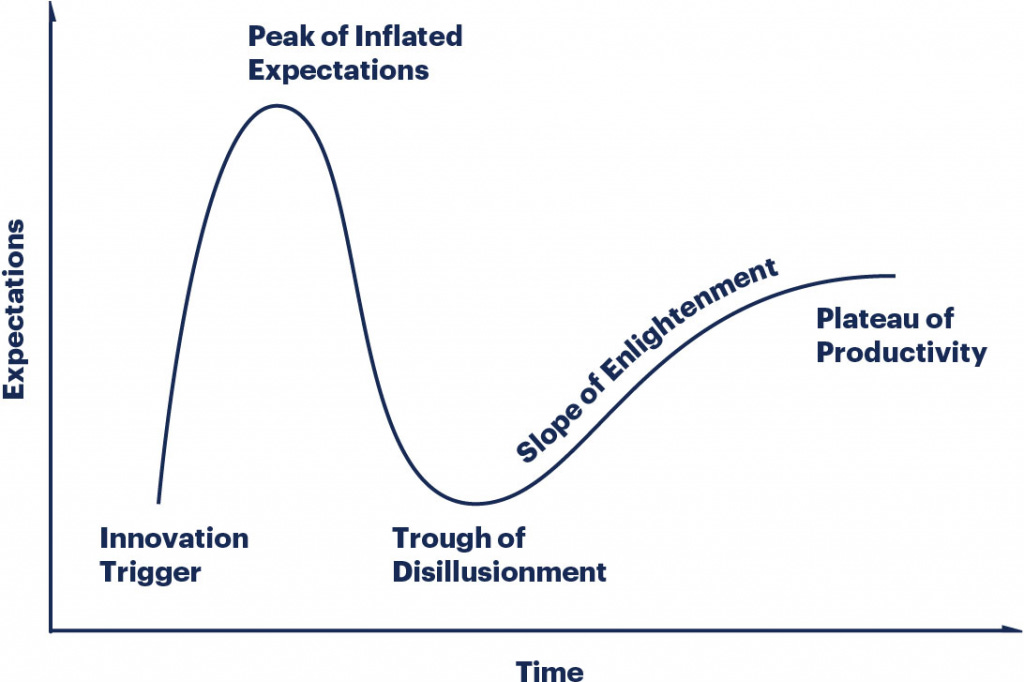

Hype cycles come and go. We are aware of that. But what remains when the hype is gone? Gartner's hype cycle model suggests that after we fall from the peak of inflated expectations into the trough of disillusionment, we climb the slope of enlightenment to reach the plateau of productivity. But do we? Some hypes seem to simply disappear or never fully reach the plateau of productivity.

AI is very much one of the peaking hypes at the moment, and I am wondering where it will go from here. As a techno-optimist I am … well, optimistic, but I wonder whether it will face the same challenges as its parents, “Big Data” and “Predictive Analytics”. Paradoxically, both are foundational to the rise of AI, yet neither has seen the widespread adoption that was heralded just a decade ago.

Back then, data was no longer just records in a database; it was the oil of the information age. In fact, we no longer talked about data in a database but about big data and data lakes. And there were a lot of impressive case studies to kick things off. Target was able to detect customers’ pregnancies before their own relatives knew. And yes, Spotify and Netflix seem to understand my preferences. But outside of that? My car breaks down, and nobody told me in advance. My portfolio crashed, and nobody saw it coming.

The inside view in most companies is just as dire as the customer experience. We mostly pay lip service to data-driven decision-making. We use data only to the extent that it is easily available to us, handle it with the knowledge of a high school graduate, and are willing to dismiss any insight as soon as it doesn’t fit our preconceived opinions. So to me, it feels like the data hype fell short. We have seen impressive glimpses of what it can do, but it never became the ubiquitous all-star it should have been.

It’s ironic, then, that “big data” and predictive analytics came together to produce LLMs: an impressive demonstration of what models trained on huge amounts of data can achieve, and the poster child of the AI hype.

So, since the same buzzword-slinging consultants who told us to be data-driven years ago are now promising an AI-driven future, what makes us believe that this hype will be different?

A set of inherited issues: Access, quality and trust

There are three reasons why data analytics fell short: lack of access, lack of quality, and lack of trust.

Analytics and AI need access to data: at minimum read access to use available information, ideally write access to actually take action. For most companies, especially big ones, it’s not that the data does not exist. It’s just not accessible to everyone who needs it. It’s stowed away in Excel sheets, in presentations on local drives, and in on-premise systems with no proper interface. On top of that, corporations like to slap on a layer of data governance that makes it impossible to get the necessary permissions.

The attempt to simply aggregate all data in a single location (those data lakes) amounted to careless dumping, turning the data lake into a data dumpster. An entire generation of enthusiastic data scientists was turned into frustrated data administrators whose main job was fixing messy data instead of building sophisticated models. “Shit in, shit out” was the tagline of this realization.

Lack of quality also fueled the lack of trust in insights derived from data. It is one of the easiest moves in the power playbook: if you are challenged with a conclusion you don’t like, you question the quality of the data it was based on. Besides the quality issues, we also face a lot of common biases: confirmation bias (this doesn’t match my personal observations), the illusion of control (I feel safer when I make the decision, not a computer), and a host of biases that make it almost impossible to feel comfortable with probabilistic thinking (e.g. loss aversion).

These issues crippled the majority of data initiatives in most companies, and they will cripple AI as well.

Let’s look at the fictional case of a trouser company. The CEO loves AI and wants to start by automating all outbound sales calls in the sales department. Theoretically, this is possible, and I have heard of companies that made it work: an AI automatically calls leads and tries to capture orders from them.

In practice, this is where it becomes difficult:

The company uses an old CRM system, hosted on-premise on some servers in the basement. It doesn’t have an API that you could use to actually get and update the leads that the AI is calling. (access)

After updating the CRM (at the cost of lots of time and money), you quickly find out that the data quality sucks. In theory, all relevant customer data should be linked in it, but this is not the case. You feel like the CRM is maintained mostly by monkeys, and your effort to fix the quality issues feels like the myth of Sisyphus. (quality)

A review of your logs shows that customers often ask questions about your product portfolio and about reference customers. You try to gather all relevant data in a single place to make it accessible to the AI (of course, it is not accessible yet). No matter what you do, the same monkeys who mess up your CRM are also tasked with keeping all product-relevant data up to date. Just as you feel like you are almost there, legal calls to talk about your loose grip on data privacy and reminds you of the strict need-to-know sharing rules. (access + quality + trust)

An odd couple of sales and product managers who used to hate each other’s guts surprisingly agree for the first time: after monitoring the logs, they conclude that the AI fails to grasp the nuances of trousers and trouser sales. High-potential customers are no longer called by the AI. (trust)

On the 100th call, the AI makes a false promise to a customer. The subsequent attempt to right the wrong leads to the defection of this valued customer. As a consequence, every AI call must now be supervised in real time by a human coworker who can intervene. This basically nullifies the productivity gain from AI in this use case, but I guess we are “just not there yet”. Nobody dares to say that human colleagues have made false promises to customers before, too. (trust)

A quick measurement shows that skimming the summary of the sales call and then manually placing the order takes almost as much time as a non-AI call, during which the sellers placed the order in the ERP system themselves. The fix would be to let the AI place the order directly in the ERP system, which, you guessed it, is an on-premise system with no API. It’s also managed by another department, whose lead is not shy about announcing that letting an AI manipulate data in the ERP is suicide. (access, trust)

This is, of course, a caricature, but I bet it feels oddly familiar if you work in a large corporation or an older small or medium-sized enterprise. The use case quickly turns from a disruptive change for the better into a minor one with questionable ROI. Looking at that alone, I would say AI is about to follow in the footsteps of its parents: some impressive B2B use cases that are mere anecdotes in the grand scheme of things. But there is a slight difference.

The slight difference: AI as your daily companion

I am part of a generation with childhood memories of screeching dial-up modems. From that first wave of the consumer internet, I fondly remember writing my first email.

I also remember the first question that came up after I wrote the email: Did it work?

So I called the friend I had just emailed to tell him to check his inbox. The same went for my first emails with attachments, and later for attachments larger than a couple of MB (mailboxes constantly ran into limits, since you had something like 20 MB of space at most). There was doubt that it worked, until there wasn’t. Since then, technical miracles have turned into technical commodities: who doesn’t read their texts within a few hours?!

Just as messaging became normal in our private lives first and extended to business second (think Slack and Teams), AI has a chance to pull off the same. Even though it faces the same challenges as data and analytics in gaining traction in most business cases, unlike its parents, it has individual and personal use cases right out of the gate.

I use AI for technical or professional questions when I am stuck or want a quick second opinion. Easy research tasks with public or “private” sources are an absolute no-brainer. Sometimes it’s more creative brainstorming, some text polishing, or bringing structure to messy thoughts: AI has become a valued companion in my daily work and life. I even use it to identify flowers when I am on a hike with my five-year-old.

All of this makes me comfortable with AI and probably also more tolerant of its shortcomings. Although I remain disappointed by some use cases, it is doing a fantastic job in others.

The experiences that others and I have on a daily basis could be enough to increase trust in this new technology and strengthen the resolve to finally tackle the access and quality issues that block the most exciting use cases.

I am also aware that I am part of a tech bubble. So it would be preposterous to assume that my early adoption of AI is shared by most knowledge workers across industries, let alone across all professions.

We are not there yet, but there is a good chance that we will become increasingly trusting of what AI can do and increasingly tolerant of its shortcomings.

I'm actually planning to write 1-3 articles (depending on how long the first one ends up being) with an in-depth, nuanced take on AI. I plan to argue both sides of the debate with equal fervor.

Why? Because AI is one of the most helpful tools I've come across when it comes to mental and physical health. It has found through-lines in issues I've been suffering from for years, after Western doctors had given up on me. And for mental health? Those who dare to talk to a machine about their problems (I can name myself and at least five friends) have found huge help. I've had breakthroughs in about seven different areas of life, while the mental health "professionals" in my country have tried to dope me up or have outright ghosted and dismissed me.

That said, the ethics of its creators and its effect on our planet are real, terrifying concerns that we should all be very aware of. I had an abysmal experience with OpenAI's support that left a rather foul taste in my mouth.

So I appreciate you writing this and not just screaming "FUCK AI" into the void. We definitely need more nuanced discussion of this subject, not blanket support or blanket condemnation.